A byte (B) is the smallest group of information that a computer can process in one chunk or bite. Yes, the 'byte' did originally mean a 'bite' but it was changed so a Brazil-like error (see the movie) can't transform it into the 'bit'.

The bits in a byte are processed together and more importantly for us the byte is presented to us in a form that we can understand. Each byte can be encoded to correspond to a digit, a letter of the alphabet, a punctuation character or other symbol.

How many bits are in a byte?

"How many bits are in a byte?" is an important question that has no definitive answer although it is commonly accepted that there are 8-bits in a byte.

The answer often used to depend upon the computer hardware in question because a byte is a set of adjacent bits that are processed as a group, a chunk or "bite". Computers in the 1960s and 1970s often processed in "bites" of 7-bits or less. Nowadays consumer computers usually process data in groups of 32-bits or 64-bits, but we call those groups words rather than bytes.

The commonly held meaning of a byte arose from the need to encode alphabetic characters so we could understand computer output. The 8-bit extended ASCII code was developed for this purpose and led to the common acceptance of an 8-bit byte because a character in a document was normally stored in 8-bits. More recently this definition has gained the support of the international standard ISO/IEC 80000-13:2008. This document recommends that a byte should have 8-bits to avoid confusion. But the standard is not definitive and simply supports the common understanding:

- it does not make the 8-bit byte an international standard.

- it acknowledges that the correct term for 8-bits processed together is an octet.

- it does not make the letter "B" an international standard abbreviation for a byte because B represents the bel, a measure of sound intensity as used in the word decibel.

That is enough information to understand what a byte is. Read on if you want more understanding of byte prefixes and the uses, history and future of the 8-bit byte.

The byte is used for characters to communicate with us

There is one particularly important reason why we need a byte. The byte standardizes the characters we need to communicate with a computer system and other users of computer systems.

A binary computer has no inherent need to work with a byte. People design computers to use bytes because they make it easier for us to work with computers. We can't make sense of streams of 0s and 1s so we want a language that we can understand. That means the computer has to convert its chunks of information into characters that we can make sense of: Arabic numerals (0 to 9), English text including punctuation, plus whatever other codes I need to communicate. For example, when I type on the keyboard I need to send a control code for the end of a line so there is an encoding for that.
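Here is a rough Python sketch of that idea: it shows how a few typed characters, including the end-of-line control code, turn into byte values and bit patterns. The function name `show_bytes` is made up for this example, and I'm assuming plain ASCII as the encoding.

```python
def show_bytes(text, encoding="ascii"):
    """Return (character, decimal value, 8-bit pattern) for each encoded byte.

    Assumes a single-byte encoding such as ASCII, so that each character
    corresponds to exactly one byte.
    """
    encoded = text.encode(encoding)
    return [(ch, value, format(value, "08b")) for ch, value in zip(text, encoded)]


if __name__ == "__main__":
    # "\n" is the line feed control code sent at the end of a line.
    for ch, value, bits in show_bytes("Hi!\n"):
        print(repr(ch), value, bits)
```

Each row pairs a character we can read with the 0s and 1s the computer actually stores.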

The byte is used as a measure of computer storage

The byte is also used as a measure of computer storage. Here's where it gets a bit more complicated.

Have you ever bought a disk and found that it wouldn't fit your data even allowing for the space required to organize the disk? The manufacturers of computer memory and computer disk drives use different measures for the same term. Computer memory is marketed with a binary multiple, disks are marketed with a decimal multiple. The differences between the two measures start small but they increase with each new order of magnitude. A kilobyte of memory is only about 2% more than a kilobyte of disk but a terabyte of memory is about 10% more than a terabyte of disk.
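If you want to check those percentages yourself, the arithmetic can be sketched in a few lines of Python. The function name `binary_excess_percent` is just something I've picked for illustration.

```python
def binary_excess_percent(power):
    """Percentage by which 2**(10*power) exceeds 10**(3*power).

    power=1 compares kilo-scale units, power=2 mega-scale, and so on.
    """
    binary = 2 ** (10 * power)
    decimal = 10 ** (3 * power)
    return (binary - decimal) / decimal * 100


if __name__ == "__main__":
    for power, name in enumerate(["kilo", "mega", "giga", "tera"], start=1):
        print(f"{name}: binary multiple is {binary_excess_percent(power):.1f}% larger")
```

The gap grows at each step because every extra factor of 1,024 versus 1,000 adds another 2.4% on top of the previous difference.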

Once your memory and disks are installed in your computer, the operating system and your applications will generally use the same measure. Just be aware that some programs don't. The easy way to check is to compare the number of bytes with the multiple displayed.
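One way to make that comparison is to take the raw number of bytes and scale it both ways. The sketch below is only an illustration: `describe_size` is a hypothetical helper, not something your operating system provides.

```python
def describe_size(num_bytes):
    """Format a byte count with both decimal (SI) and binary (IEC) multiples."""
    si_units = ["B", "kB", "MB", "GB", "TB", "PB"]
    iec_units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB"]

    def scale(value, base, units):
        # Divide by the base until the value fits under it or units run out.
        index = 0
        while value >= base and index < len(units) - 1:
            value /= base
            index += 1
        return f"{value:.2f} {units[index]}"

    return scale(num_bytes, 1000, si_units), scale(num_bytes, 1024, iec_units)


if __name__ == "__main__":
    # A disk sold as "500 GB" holds 500,000,000,000 bytes.
    print(describe_size(500_000_000_000))
```

If a program reports a number close to the second value but labels it "GB", it is using the binary multiple with the decimal name.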

The following table illustrates those differences. I'm not advocating that you use the IEC terms which have not caught on anywhere - I'll bet you've never heard of them. You just need to be aware that the decimal names are used interchangeably with the binary names even though they measure different values.

Just in case you're thinking that disk drive vendors are trying to rip us off, they're not. Storage media such as disk drives are not inherently binary, unlike computer CPUs, memory, and other supporting hardware. Also, the vendors are more technically correct: SI units are decimal. That's why the IEC solution is to give distinct names to the binary multiples.

Byte multiples

| IEC name (binary, e.g. CPU, memory, RAM) | Binary (base 2) | Decimal (base 10) | SI name (decimal, e.g. hard disks) | Binary (base 2) | Decimal (base 10) |
|---|---|---|---|---|---|
| kibibyte (KiB) | 2^10 | 1,024 = 1.02 x 10^3 | kilobyte (kB) | n/a | 1,000 = 10^3 |
| mebibyte (MiB) | 2^20 | 1,048,576 = 1.05 x 10^6 | megabyte (MB) | n/a | 1,000,000 = 10^6 |
| gibibyte (GiB) | 2^30 | 1,073,741,824 = 1.07 x 10^9 | gigabyte (GB) | n/a | 1,000,000,000 = 10^9 |
| tebibyte (TiB) | 2^40 | 1,099,511,627,776 = 1.10 x 10^12 | terabyte (TB) | n/a | 1,000,000,000,000 = 10^12 |
| pebibyte (PiB) | 2^50 | 1,125,899,906,842,624 = 1.13 x 10^15 | petabyte (PB) | n/a | 1,000,000,000,000,000 = 10^15 |
| exbibyte (EiB) | 2^60 | 1,152,921,504,606,846,976 = 1.15 x 10^18 | exabyte (EB) | n/a | 1,000,000,000,000,000,000 = 10^18 |
| zebibyte (ZiB) | 2^70 | 1.18 x 10^21 | zettabyte (ZB) | n/a | 10^21 |

Some of the prefixes that we're talking about are relatively recent. The peta- and exa- prefixes were internationally recognized in 1975, the zetta- prefix in 1991.

Check out for yourself how characters are encoded in bytes

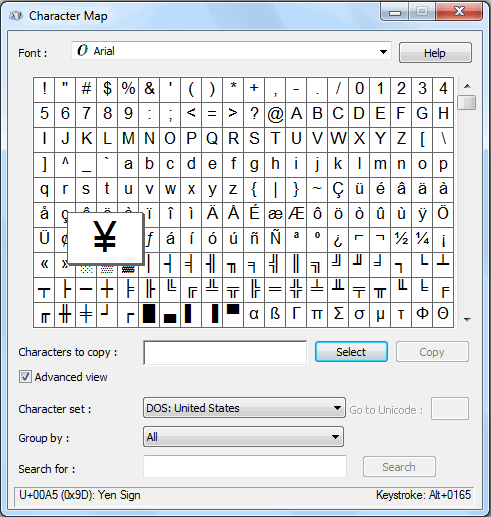

You can have a look at how specific characters are encoded by using Windows Character Map. You will notice that hexadecimal and decimal values are used but not binary values.

From the Start Menu, select 'All programs', select 'Accessories', select 'System Tools', select 'Character Map'.

When the Character Map window appears, tick 'Advanced View' so you can choose a character set. The first font is Arial and I've chosen the 'DOS: United States' character set which mimics the default for the original IBM PC.

I've selected the Yen character which is enlarged for viewing. The status line displays a couple of hexadecimal codes. "U+00A5" is the Unicode value. "0x9D" is the character number in this character set, where the "0x" prefix indicates a hexadecimal number: 9D₁₆ = 157₁₀ = 10011101₂. The two codes differ because the Yen sign lies outside the 7-bit ASCII range, where each character set assigns its own values. Because its Unicode value is above U+007F, UTF-8 uses two bytes to store this character. A character in the ASCII range has the same code in both and is stored in a single byte.
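If you don't have Character Map handy, the same values can be checked with a short Python sketch. I'm assuming here that Python's `cp437` codec corresponds to the 'DOS: United States' character set; the variable names are only for illustration.

```python
yen = "\u00a5"  # the Yen sign

# Code point as reported by Character Map ("U+00A5").
code_point = ord(yen)

# The same character in the DOS United States code page
# (assumed to be Python's "cp437" codec).
dos_byte = yen.encode("cp437")[0]

# The single-byte code written in hexadecimal, decimal and binary.
print(hex(dos_byte), dos_byte, format(dos_byte, "08b"))

# The bytes UTF-8 uses to store the same character.
utf8_bytes = yen.encode("utf-8")
print(f"U+{code_point:04X}", utf8_bytes.hex(), len(utf8_bytes))
```

The first line of output shows one number in three bases; the second shows how many bytes UTF-8 needs for the same character.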

Why has the 8-bit byte become a de facto standard?

A byte is normally 8-bits for five reasons that were mainly decided during the birth of modern semiconductor computers in the 1970s:

- The primary reason is that 8-bits has been the lowest common denominator for most computer systems since the 1970s. Other word sizes, particularly 18- and 36-bit (a 36-bit word provided 10 decimal digits of accuracy), have disappeared and now the computer world almost exclusively uses multiples of 8-bits: 8-, 16-, 32-, 64- and eventually 128-bit.

- CPUs: The first commercial microprocessors in the early 1970s were 4-bit but the next generation in the late 1970s were predominantly 8-bit when companies such as Apple and IBM provided computers for the masses.

- CPU support hardware: Circuit boards and peripheral hardware were comparatively expensive in the 1970s so new systems were designed to use existing 8-bit hardware. The IBM PC used the Intel 8088 CPU, basically a 16-bit 8086 CPU with the 16-bit external data paths reduced to 8-bit so cheaper and widely available hardware could be used. That's why we talk about x86 architecture and compatibility rather than x88.

- Operating systems (OS): 7-bit ASCII encoding was the new standard in the 1960s but by the time it was internationally accepted there were already many variants. In the early 1960s, IBM introduced 8-bit EBCDIC encoding. So once 8-bit computing became common, it was natural for character encoding to follow. In the late 1970s, UNIX was expected to become the dominant operating system and UNIX's C programming language originally required a minimum of 8-bit character types. It was quite a surprise when the IBM PC-based operating systems (PC DOS or MS-DOS) became ubiquitous, but they too used 8-bit character encoding (though ignoring EBCDIC): the 7-bit ASCII Character Set (128 characters) plus the 8th bit for Extended Character Sets (also called code pages) to support other languages. Windows, being based on DOS, followed the same path so the standard Windows data type for Byte remains 8-bits.

- Networking: I've added this fifth reason to provide another perspective on the continuity of the byte. Modern digital telephony standardized on 8-bits in the early 1960s and this formed the basis for many future networking developments including the Internet.
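To see what the 8th bit and code pages mean in practice, here is a small Python sketch that decodes the same byte under several character sets. The function name `decode_in_code_pages` and the choice of code pages are my own assumptions for illustration.

```python
def decode_in_code_pages(byte_value, code_pages=("cp437", "cp850", "latin-1")):
    """Decode one byte value under several single-byte character sets."""
    raw = bytes([byte_value])
    return {name: raw.decode(name) for name in code_pages}


if __name__ == "__main__":
    # 0x41 is in the 7-bit ASCII range, so every code page agrees on "A".
    print(decode_in_code_pages(0x41))
    # 0xE9 has the 8th bit set, so its meaning depends on the code page.
    print(decode_in_code_pages(0xE9))
```

The lower 128 values stay the same everywhere, which is why the extended sets remained compatible with 7-bit ASCII.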

Will the byte eventually become 16- or 32-bit?

The 8-bit byte has largely remained for efficiency and it is hard to see larger byte sizes becoming a new standard any time soon. As the transition from the QWERTY keyboard layout has shown - well, it hasn't actually happened yet - we are averse to change. We only tend to move if we are pushed as the slow transition from IPv4 to IPv6 shows.

Unicode, for example, was originally envisaged as a 16-bit character code but some smart design has allowed 8-bit encoding to provide sufficient flexibility, moderate efficiency and high compatibility with ASCII such that UTF-8 is now the dominant standard on the Internet.
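The ASCII compatibility and the variable length of UTF-8 can be seen in a brief Python sketch. The helper name `utf8_length` is hypothetical and the sample characters are my own picks.

```python
def utf8_length(char):
    """Number of bytes UTF-8 uses to encode a single character."""
    return len(char.encode("utf-8"))


if __name__ == "__main__":
    # ASCII text is byte-for-byte identical in ASCII and UTF-8.
    print("byte".encode("ascii") == "byte".encode("utf-8"))
    # Characters further from the ASCII range take more 8-bit units.
    for char in ["A", "\u00a5", "\u20ac", "\U0001F600"]:
        print(f"U+{ord(char):04X}", utf8_length(char))
```

Plain English text costs one byte per character, while less common characters use two, three or four bytes, all built from the same 8-bit unit.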
